Integrate Data in Snowflake for Seamless Analytics

When you think of companies and institutions creating data regularly, they rarely do it from one platform. All data from multiple sources must be strategically combined into a single data point that can be accessed to gain insights into the user base and optimize business operations. These insights are valuable to the decision-making process of organizations, but can only be achieved through proper data integration.

Raw data, when collected, is often an unstructured mess. Data warehouses and pipelines that store and build links within the data are essential if we want to achieve desired outcomes with the data we have acquired, especially so if we want to modify and understand it at a larger scale. Combining data seamlessly from dispersed sources to offer a single, unified view is a specialty of Snowflake, which is achieved through the Snowflake ETL integration.

What is Snowflake?

If the entirety of your company’s data warehouse could be stored digitally on the cloud, there would be no limits to storage capacity and retrieval. This is exactly the premise behind Snowflake’s data integration, which uses Amazon Web Services (AWS) and Azure to provide holistic cloud data warehousing solutions. Snowflake eliminates the necessity for selecting, installing, configuring, and maintaining software and hardware manually.

The resources that would otherwise go into installation, maintenance, and training would represent substantial cost savings for firms with extensive operations, hence, this alternative becomes inevitable. As an enterprise-wide data management and data governance solution, Snowflake lets you instantly exchange encrypted and secure data through an ETL solution of your choice.

Why Use Snowflake?

Efficiency

Long gone are the days of nightly batch loads for processing your collected data batches. Snowflake enables you to analyze complex data streams in real time, thus enhancing the quality of the data upon which your most critical decisions are based and the overall performance of your analytics process rests.

Cache

Running extensive queries and having to wait for their results can be both time-consuming and tiresome for your staff. Through the use of smart caching, Snowflake avoids regenerating already existing reports to quickly answer commonly occurring queries.

Transparency

The Snowflake architecture helps your company be data-accountable, ensuring access throughout the entire enterprise to gain valuable business insights that facilitate data-driven decisions on every level of the organization. This encompasses marketing, sales, finance, procurement, and even partner relations.

Customer-Centric

Snowflake gives you insight into customer behavior and how they use your product. One of the key tenets of data science is that customer satisfaction may be ensured by expanding product offerings based on precise customer requirements.

How an ETL loads and Integrates Data into Snowflake

ETL is an acronym that represents Extract, Transform, and Load. ETL allows data that can come from very different sources to be converted into compatible formats and uploaded onto a designated data warehouse. Sources of data can vary from flat files stored on media servers to third-party applications.

Snowflake ETL offers a powerful mechanism for businesses to upload and integrate their data into the Snowflake Data Warehouse using an ETL extension. This translates into the process of collecting useful data from your and your partners’ databases and achieving the required conversions to make the data ready for detailed analysis, and then transferring it into the Snowflake architecture.

What Factors Can Help You Choose an ETL?

Paid Versus Open-Source

The most important factor at play when looking for a suitable ETL extension for data integration is the cost involved in such an undertaking. While paid tools offer better interfaces and continued support, open-source alternatives are available free of cost with fewer changes over time.

Ease of Use

Some ETL software applications promise a great user experience, from a no-code drag-and-drop interface to an extremely streamlined process, while others will require programming knowledge to run SQL/Python scripts. Your decision will ultimately depend on your unique business requirements and what your team is more comfortable using.

Diverse Data Sources

Most ETLs are capable of dealing with many different variations of data sources, from complete apps to unstructured blobs of binaries. You can also incorporate several databases at once, depending on your business needs.

Data Conversion

Conversion techniques such as expression, aggregate, and joiner are incredibly useful in turning rudimentary data into organized, structured data for easy manipulation and querying.

Customer Service

Most enterprise solutions offer 24/7 customer service, where data problems are often handled through a support desk. To address concerns, emails, and phone numbers are also provided.

By carefully considering these factors, you can choose an ETL tool that meets your business needs and helps you efficiently manage your data processing tasks with the Snowflake platform.

Load and Integrate Data in Snowflake

Method 1: Third-Party ETLs

Hevo

The first choice on our list is Hevo Data, a no-code data pipeline solution whose primary purpose is to automatically replicate data in real-time from over a hundred supported data sources to channel it to data warehouses such as Snowflake. Hevo is built from the ground up to be prepared for experimentation, which makes it very design fault-proof. It enhances and converts the data in a way that does not necessitate professional coding expertise while retaining analytic usability.

Blendo

Blendo is most widely known as a data integration tool that simplifies the process of connecting diverse data sources to databases. It is built on natively supported and coded data connections that streamline the entire ELT integration process. Blendo specializes in faster BI insights and a COPY functionality that allows real time data transfer to Snowflake.

Their sync support includes Redshift, BigQuery, SQL, Snowflake, and Panoply. Blendo not only loads and integrates data into Snowflake, but also automates data management and conversion so it can become a hands-off process.

Matillion

Matillion is built from the ground up for supporting cloud data warehousing involving heavier loads. Suitable for Redshift, BigQuery, and Snowflake, it is considered a viable platform for most use cases including workflow orchestration, data integration, and executive decision-making. Matillion ETL emphasizes strong transformations and combinations that can solve and retain insights from even the most complex business logic problems. Matillion’s capacity to plan tasks based on the availability of the required resources offers additional benefits for users.

Streamsets

StreamSets Data Collector is a free-to-use software that lets you build complex data import pipelines for the ElasticSearch JSON-less Java library. These pipelines are capable of adapting to changes far more quickly than their alternatives, based on variables such as semantics, schema, and infrastructure. StreamSets offers two variants, one being a simple data collector and the other a comprehensive apache spark-based ETL known as the transformer.

How to Integrate ETL Tools with Snowflake?

Snowflake enables data integration and transformation both during and after loading (ELT). It is highly compatible with a variety of data integration technologies, as already discussed in the previous section, giving you the flexibility to scale up or down to meet peak loads. For the sake of this simplicity, we will be using the example of the HEVO platform to transfer Firebase Analytics data.

Step 1: To transfer your data from Firebase Analytics to the Snowflake platform, set up Firebase Analytics as a source by typing in your Firebase login credentials into the HEVO portal. In order for this setup to work, you must name your database and pipeline.

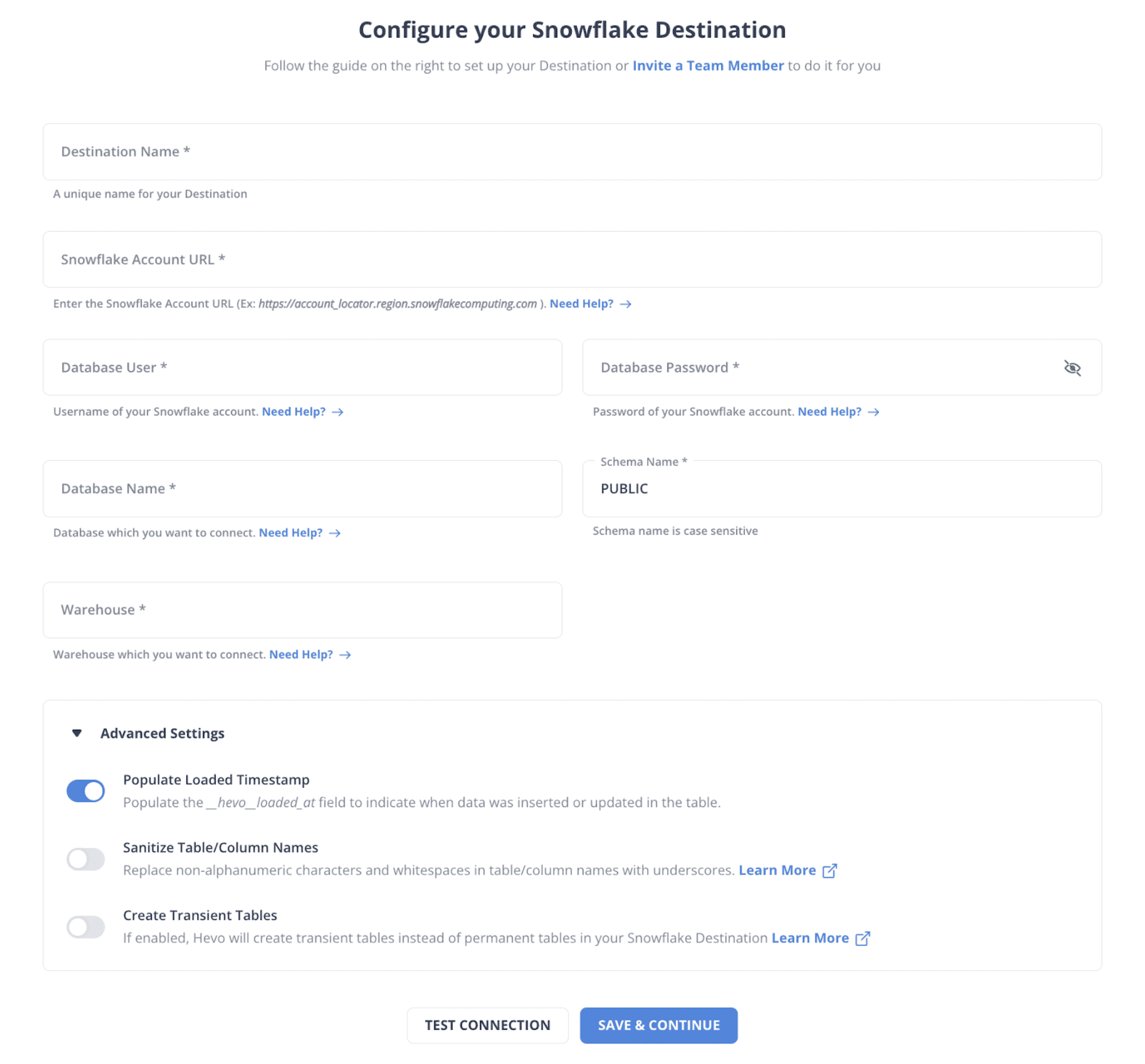

Step 2: The next step is to enter your Snowflake credentials into the HEVO platform. You will also be required to provide information about your particular data warehouse, its pertinent databases, schemas, and a unique name of your choice for this specific destination.

Source: HEVO

Method 2: Using CSV Files

How Does This Work?

CSV files can be exported from your chosen analytics software and imported directly into Snowflake.

What are the Steps Involved?

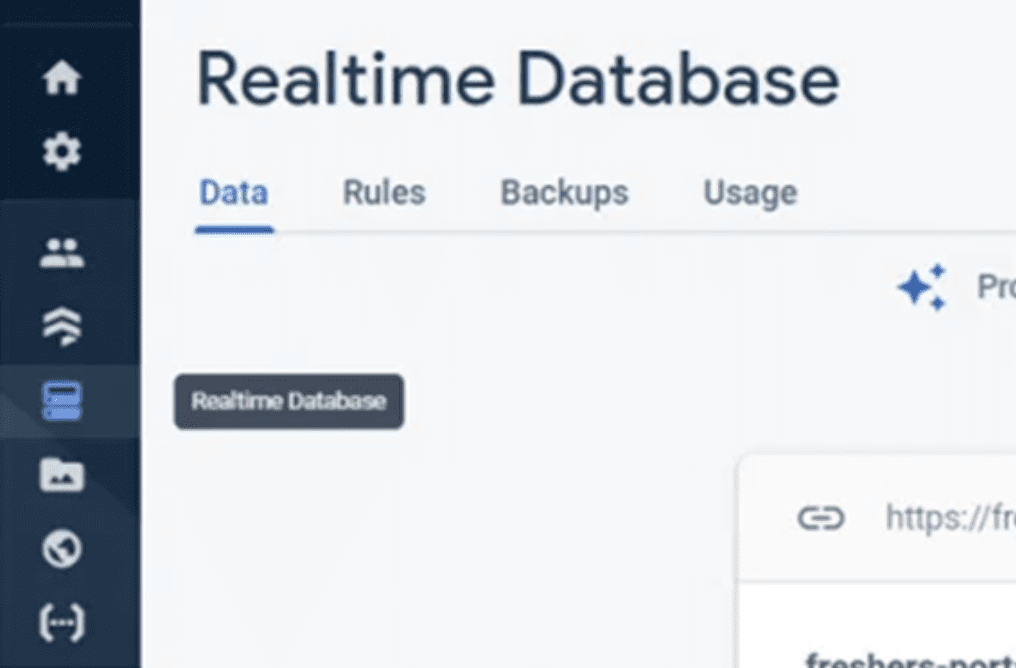

Step 1: Make sure that you have a valid Firebase account and are already logged in. Go to your project next and enter the real-time database module.

Source: HEVO

Step 2: Use the three dots in the upper-right corner to access the drop-down menu.

Step 3: From this dropdown menu, select the ‘Export JSON’ button.

Step 4: At this point, your file should have automatically begun to download. Using the read JSON function, you can easily convert the JSON file format into a CSV file.

Step 5: Execute the ‘Load Data Wizard’ next directly in the Snowflake dashboard. In the user interface, click on ‘Data’, and then the ‘Databases’ section, where you should be able to find the Wizard.

Step 6: From the list of provided options, select the variant of the data warehouse that best fits your needs, and then proceed to import your files.

Step 7: You can select your files from a variety of sources. For example, you can use any of the partitioned drives on your system but also upload from cloud solutions such as Microsoft Azure, Amazon S3, or Google One Cloud Storage solutions.

Limitations to Manual Entry

Some businesses may prefer to use manual exporting methods for data loading and integration in Snowflake, or even implement an API to streamline the process. The major problem here is that integrating data into Snowflake using the manual approach, as compared to a third-party integrated ETL extension, is that you simply cannot work with real-time data.

Maintaining APIs requires teams with substantial development expertise, training, and diverse technical skill sets. Depending on the speed of the nightly batch loads, real-time data minimizes the likelihood of falling behind on changes occurring within the organization.

The Bottom Line

Snowflake is a terrific cloud warehousing solution that enables corporations to store and transform their data resources in order to gain actionable insights and solve their biggest challenges.

By combining the functionalities of both Snowflake architecture and third-party data integration solutions, businesses can not only incorporate disparate information into a single, cohesive source, but also maximize their data engineering resources to automate processes, streamline operations, and benefit from improved scalability.

Are you looking for reliable and cost-effective data analytics solutions for your own business? At RTS Labs, we make software that gives you an unfair advantage. Our elite cross-functional teams bring you the agility of a startup and the scalability of an industry leader.