- Neural networks are becoming more transparent with techniques like LRP and SHAP addressing the “black box” problem.

- Next-gen architectures like CNNs and transformers allow AI to process complex data efficiently.

- Ethical AI development focuses on reducing bias and improving fairness in critical fields.

Neural networks have reshaped the landscape of artificial intelligence (AI), offering immense potential in fields ranging from healthcare to finance. However, despite their capabilities, traditional neural networks are often referred to as “black boxes.” This term highlights the opacity of their inner workings, as these models produce outcomes that are difficult to explain or interpret. While they can achieve high levels of accuracy, understanding how they arrive at decisions remains a challenge.

The next generation of neural networks seeks to address this issue by improving transparency, scalability, and real-world applications. This article will explore these advancements, offering a deeper look into how the new approaches are breaking down the “black box” while driving AI progress.

1. What Are Neural Networks?

Neural networks are foundational to AI and machine learning. Their design is loosely inspired by the structure of the human brain: they consist of layers of interconnected nodes (often referred to as neurons) that process data and extract patterns from complex datasets.

A typical neural network consists of three main types of layers:

- Input layer: The data enters the network through this layer. Each input node represents a feature of the data.

- Hidden layers: The magic happens here. These layers apply various mathematical operations to detect patterns, identify relationships, and make predictions.

- Output layer: This layer provides the final result, such as a prediction, classification, or decision.

Each connection between neurons is assigned a weight, and during training, these weights are adjusted to minimize error. Neural networks have shown remarkable success in tasks such as image recognition, speech processing, and natural language understanding.

For example, deep learning models like Convolutional Neural Networks (CNNs) have been instrumental in identifying objects within images, while Recurrent Neural Networks (RNNs) have played a key role in processing sequential data, such as text or time-series data.
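The layer structure and weight adjustment described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production training loop: the toy dataset (logical AND), the starting weights, the layer sizes, the learning rate, and every function name are assumptions chosen only to keep the example small and reproducible.

```python
import math

# Toy dataset (an assumption for illustration): logical AND of two features.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params():
    # Fixed, asymmetric starting weights so the run is reproducible.
    return {
        "W1": [[0.5, -0.4], [0.3, 0.8]],  # W1[j][i]: input i -> hidden unit j
        "b1": [0.0, 0.0],
        "W2": [0.7, -0.6],                # hidden unit j -> output
        "b2": 0.0,
    }


def forward(params, x):
    # Input layer -> hidden layer (tanh) -> output layer (sigmoid).
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(params["W1"], params["b1"])]
    output = sigmoid(sum(w * h for w, h in zip(params["W2"], hidden)) + params["b2"])
    return hidden, output


def mean_loss(params):
    # Binary cross-entropy averaged over the dataset.
    total = 0.0
    for x, y in DATA:
        _, p = forward(params, x)
        p = min(max(p, 1e-12), 1.0 - 1e-12)  # guard against log(0)
        total += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
    return total / len(DATA)


def train_step(params, lr):
    # Accumulate gradients over the full batch (backpropagation by hand).
    g_w1 = [[0.0, 0.0], [0.0, 0.0]]
    g_b1 = [0.0, 0.0]
    g_w2 = [0.0, 0.0]
    g_b2 = 0.0
    for x, y in DATA:
        hidden, p = forward(params, x)
        d_out = p - y  # gradient of the loss w.r.t. the output pre-activation
        g_b2 += d_out
        for j in range(2):
            g_w2[j] += d_out * hidden[j]
            d_hidden = d_out * params["W2"][j] * (1.0 - hidden[j] ** 2)
            g_b1[j] += d_hidden
            for i in range(2):
                g_w1[j][i] += d_hidden * x[i]
    # Move every weight a small step against its gradient.
    n = len(DATA)
    params["b2"] -= lr * g_b2 / n
    for j in range(2):
        params["W2"][j] -= lr * g_w2[j] / n
        params["b1"][j] -= lr * g_b1[j] / n
        for i in range(2):
            params["W1"][j][i] -= lr * g_w1[j][i] / n


def train(steps=2000, lr=0.5):
    params = init_params()
    initial = mean_loss(params)
    for _ in range(steps):
        train_step(params, lr)
    return params, initial, mean_loss(params)


params, initial_loss, final_loss = train()
print(f"loss before training: {initial_loss:.3f}, after: {final_loss:.3f}")
```

Real frameworks compute these gradients automatically, but the principle is the same: run the data forward, measure the error, and nudge each weight in the direction that reduces it.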

2. The Concept of the “Black Box” Problem in Deep Learning

While neural networks have achieved incredible success, they also come with significant challenges. The “black box” problem refers to the difficulty in understanding how these networks arrive at their decisions. Each individual operation is simple, but a prediction emerges from thousands to billions of weights interacting across many nonlinear layers, so even researchers often struggle to explain why a model made a particular prediction.

This lack of transparency poses several risks:

- Accountability: In high-stakes fields like healthcare or finance, where AI is used to diagnose patients or determine loan eligibility, understanding how decisions are made is critical.

- Bias and fairness: Without visibility into the decision-making process, it’s hard to ensure that AI models aren’t perpetuating biases hidden in the data.

- Debugging: When an AI model produces incorrect results, it’s difficult to trace the error back to a specific layer or weight in the network, making troubleshooting time-consuming.

Real-world examples illustrate this issue. For instance, AI models used in hiring have been found to discriminate against certain groups based on biased training data, yet the exact reasoning behind the model’s decisions remains unclear. This lack of transparency not only impacts trust but also slows the adoption of AI in sensitive areas.

3. Innovations in Neural Networks: Improving Transparency and Explainability

In response to the challenges posed by the “black box” problem, researchers have developed techniques to make neural networks more interpretable and transparent. These methods offer insights into how a model arrives at its decisions, providing both developers and users with a better understanding of the process.

Some of the most promising techniques include:

- Layer-wise Relevance Propagation (LRP): LRP propagates a prediction backward through the network, layer by layer, and assigns a relevance score to each input feature, allowing users to see which features contributed most to a particular decision. This is particularly useful in fields like medical imaging, where understanding which pixels in an X-ray influenced a diagnosis can help doctors trust AI-based tools.

- Attention Mechanisms: Attention mechanisms, used in models like Transformers, allow the network to weight specific parts of the input more heavily when making predictions. Inspecting these weights can indicate which words or phrases mattered most in a text classification task, although attention weights are a useful hint rather than a complete explanation of the model’s reasoning.

- SHAP (SHapley Additive exPlanations): SHAP builds on Shapley values from cooperative game theory to offer a unified framework for interpreting model output: it attributes a share of each prediction to every input feature. This method has been widely adopted in sectors such as finance, where it’s crucial to understand why certain decisions were made.
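To make the idea of relevance scores concrete, here is a minimal sketch of one common LRP variant, the epsilon rule, applied to a tiny ReLU network. The network weights, the absence of bias terms, and all function names are assumptions made for illustration; real LRP toolkits support many layer types and several propagation rules.

```python
# Hypothetical 3-input, 2-hidden-unit, 1-output ReLU network without biases.
W1 = [[1.0, -2.0, 0.5],   # weights into hidden unit 0
      [0.5, 1.0, -1.0]]   # weights into hidden unit 1
W2 = [2.0, -1.0]          # weights from hidden units to the output


def relu(v):
    return max(0.0, v)


def forward(x):
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    output = sum(w * h for w, h in zip(W2, hidden))
    return hidden, output


def stabilize(z, eps):
    # Push the denominator slightly away from zero, keeping its sign.
    return z + (eps if z >= 0 else -eps)


def lrp_epsilon(x, eps=1e-9):
    hidden, output = forward(x)

    # Step 1: split the output score among hidden units in proportion to
    # each unit's contribution (activation * weight) to the output.
    z_out = stabilize(output, eps)
    r_hidden = [h * w / z_out * output for h, w in zip(hidden, W2)]

    # Step 2: split each hidden unit's relevance among the inputs in
    # proportion to each input's contribution to that unit's pre-activation.
    r_input = [0.0] * len(x)
    for j, row in enumerate(W1):
        z_j = stabilize(sum(w * xi for w, xi in zip(row, x)), eps)
        for i, (w, xi) in enumerate(zip(row, x)):
            r_input[i] += xi * w / z_j * r_hidden[j]
    return r_input, output


relevance, score = lrp_epsilon([1.0, 0.5, 0.2])
print("relevance per input:", relevance)
print("sum of relevance:", sum(relevance), "model output:", score)
```

With no biases and a very small epsilon, the relevance scores add up (approximately) to the model output, which is the conservation property that makes the scores readable as a breakdown of the prediction.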

These advancements not only enhance trust in AI systems but also ensure compliance with industry regulations that require models to be explainable, especially in fields like healthcare and finance. By improving transparency, these innovations are helping to make AI more responsible and trustworthy.
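The attribution idea behind SHAP can be illustrated by computing exact Shapley values for a very small model. This sketch does not use the SHAP library, which relies on faster approximations. The scoring function, the feature names, and the all-zero baseline are hypothetical, and brute-force enumeration over feature orderings is only practical for a handful of features.

```python
from itertools import permutations


def shapley_values(model, instance, baseline):
    """Exact Shapley values by averaging marginal contributions over all
    feature orderings. Missing features take their baseline value."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = model(current)
        for i in order:
            current[i] = instance[i]       # reveal feature i
            updated = model(current)
            totals[i] += updated - previous
            previous = updated
    return [t / len(orderings) for t in totals]


def toy_credit_model(features):
    # Hypothetical scoring function with an interaction term.
    income, debt, years = features
    return 0.5 * income - 0.8 * debt + 0.1 * income * years


instance = [4.0, 1.0, 3.0]
baseline = [0.0, 0.0, 0.0]
values = shapley_values(toy_credit_model, instance, baseline)
for name, value in zip(["income", "debt", "years"], values):
    print(f"{name}: {value:+.2f}")
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline, so each feature's share of a decision can be reported directly.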

4. Scaling Neural Networks: Next-Generation Architectures and Techniques

As neural networks become more widely adopted, the demand for scalability has grown. Training larger models that can handle vast datasets or more complex problems requires not only computational power but also innovative architectures that maximize efficiency. Next-generation neural networks have introduced several techniques that allow AI models to scale effectively.

Some of the most notable advancements include:

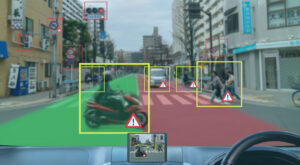

- Convolutional Neural Networks (CNNs): CNNs are designed for image processing. They apply the same small filters across every position of an image, which sharply reduces the number of parameters compared with a fully connected network and makes them faster and more efficient. These networks have become the backbone of computer vision applications like facial recognition, object detection, and autonomous driving.

- Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM): RNNs are tailored for sequential data processing, making them well suited to tasks such as language modeling and time-series forecasting. LSTMs, a subtype of RNNs, use gates to control what is stored and what is forgotten, which addresses the problem of long-term dependencies and allows models to “remember” information from earlier in the sequence.

- Transformers: Originally developed for natural language processing, transformers have gained popularity because they scale well to massive datasets. They use attention mechanisms to process an entire input sequence in parallel rather than one token at a time, making them highly efficient to train for tasks such as language translation and text generation.
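The attention mechanism at the core of transformers can be sketched as scaled dot-product attention. This is a simplified version under stated assumptions: it uses plain Python lists, omits the learned query, key, and value projections and the multiple heads found in real models, and the three toy token vectors are invented for the example.

```python
import math


def softmax(scores):
    # Subtract the maximum for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """For each query, score every key, turn the scores into weights that
    sum to 1, and return the weighted average of the value vectors."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights


# Self-attention over three toy token vectors (queries = keys = values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Every query attends to every key in a single pass, which is why the whole sequence can be processed at once. Each row of weights shows how strongly one token attends to the others.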

In addition to these architectures, researchers are exploring newer models such as capsule networks, which aim to capture spatial relationships between the parts of an object, and graph neural networks, which operate on graph-structured data such as social or molecular networks. The combination of architectural advancements and improvements in hardware, such as GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units), has paved the way for increasingly sophisticated AI systems.
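The parameter savings attributed to CNNs in the list above can be checked with simple arithmetic. The sketch below compares a fully connected layer with a convolutional layer on a 32x32 RGB image. The image size, the 64 units or filters, and the 3x3 kernel are illustrative assumptions, and the helper names are hypothetical.

```python
def dense_params(in_h, in_w, in_c, units):
    # Every input value connects to every unit, plus one bias per unit.
    return in_h * in_w * in_c * units + units


def conv_params(kernel_h, kernel_w, in_c, filters):
    # Each filter is shared across all image positions, so the count
    # depends on the kernel size, not on the image size.
    return kernel_h * kernel_w * in_c * filters + filters


print(dense_params(32, 32, 3, 64))  # 196672
print(conv_params(3, 3, 3, 64))     # 1792
```

Because the convolutional count does not grow with image resolution, the gap widens further on larger images.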

5. Real-World Applications of Next-Generation Neural Networks

The next generation of neural networks has brought about significant improvements in various industries. From healthcare to entertainment, the ability to process complex data and deliver accurate results is transforming operations and decision-making.

Healthcare

AI-driven diagnostic tools, powered by neural networks, have shown great promise in identifying diseases such as cancer, heart conditions, and eye disorders. By analyzing medical images, these systems can detect abnormalities with a level of precision that often rivals human experts. In addition, AI is being used to develop personalized treatment plans by analyzing patient history and genetic information.

Autonomous Systems

Self-driving cars and drones are among the most exciting applications of neural networks. These systems rely on deep learning models to process visual and sensor data in real time, enabling them to make decisions on the fly. By continually improving their understanding of their environment, these systems are moving closer to full autonomy.

Natural Language Processing (NLP)

Neural networks have significantly advanced language-based applications such as chatbots, virtual assistants, and translation services. Models like GPT-4o can generate human-like text, perform sentiment analysis, and engage in meaningful conversations with users. These applications have become critical in customer service, education, and business communication.

Gaming and Entertainment

In the entertainment industry, neural networks are being used to design video games that respond dynamically to player behavior. AI-generated characters can adapt to new situations, creating more immersive gaming experiences. Similarly, in film production, neural networks assist in generating realistic visual effects and animations.

These applications are just a few examples of how next-generation neural networks are driving advancements across industries, reshaping the way tasks are performed.

6. The Role of Neural Networks in Ethical AI Development

As AI becomes more prevalent, ethical considerations are more important than ever. Neural networks, like other AI technologies, are not immune to bias or ethical dilemmas. For instance, AI models trained on biased data can reinforce existing inequalities in areas like hiring or lending. As a result, the next generation of neural networks is focusing on addressing these issues by integrating fairness and accountability into the design.

One approach to tackling bias involves fairness metrics, which quantify how differently a model treats different groups, paired with mitigation techniques that reduce those gaps during or after training. Additionally, transparency tools such as LRP and SHAP not only improve explainability but also help developers identify potential sources of bias within the models.
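As one example of a fairness metric, the sketch below computes the demographic parity difference: the gap between the rates at which two groups receive a positive decision. The predictions and group labels are made-up illustration data, the function names are hypothetical, and demographic parity is only one of several fairness definitions, which can conflict with each other.

```python
def selection_rate(predictions):
    # Fraction of cases that received a positive decision (1).
    return sum(predictions) / len(predictions)


def demographic_parity_difference(predictions, groups):
    """Return the gap between the highest and lowest per-group selection
    rates, together with the rates themselves. A gap of 0 means every
    group is selected at the same rate."""
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    rates = {g: selection_rate(p) for g, p in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical hiring decisions (1 = shortlisted) for two groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap, rates = demographic_parity_difference(predictions, groups)
print(rates)  # {'a': 0.75, 'b': 0.25}
print(gap)    # 0.5
```

A large gap does not prove discrimination on its own, but it flags a disparity that developers should investigate, for example with the explanation tools discussed earlier.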

Governments and regulatory bodies are also placing increased emphasis on ensuring AI models meet ethical standards. The European Union’s AI Act, for example, aims to enforce strict transparency and fairness requirements for AI systems used in high-risk applications, including healthcare and criminal justice.

7. The Future of Neural Networks: Beyond Deep Learning

Neural networks have come a long way, but they are far from reaching their full potential. Emerging technologies are likely to push the boundaries of AI even further in the years to come.

Neuromorphic Computing

Neuromorphic computing seeks to mimic the structure and function of the human brain more closely than traditional neural networks. By designing hardware that operates like neural circuits, neuromorphic systems could deliver faster, more efficient processing for AI tasks, particularly in real-time applications.

Quantum Computing

Quantum computing could, in principle, speed up certain computations that are impractical for classical computers. If practical quantum hardware and suitable algorithms mature, it might reduce the time it takes to train some AI models, which could in turn support breakthroughs in fields like drug discovery and climate modeling. For now, these benefits remain largely speculative.

Advances in Learning Techniques

Finally, advancements in reinforcement learning, unsupervised learning, and generative models are poised to expand the capabilities of neural networks. These techniques will allow AI systems to learn from fewer labeled examples, adapt to new environments without constant retraining, and generate creative solutions to complex problems.

Final thoughts: The Next Generation of Neural Networks

The next generation of neural networks is opening the “black box” of deep learning, offering greater transparency, scalability, and real-world impact. Through innovations in explainability, architecture, and ethical AI practices, these models are becoming more powerful and trustworthy. As new technologies like neuromorphic computing and quantum computing continue to emerge, neural networks will only grow more sophisticated, shaping the future of artificial intelligence.

People Also Ask:

- What are neural networks, and how do they work?

Neural networks are AI models that process data by mimicking the structure of the human brain. They consist of layers of interconnected neurons that perform calculations and detect patterns in the data.

- Why are traditional neural networks called “black boxes”?

They are called “black boxes” because it’s difficult to interpret how these models make decisions due to the complexity of their internal processes.

- What innovations are improving the transparency of neural networks?

Techniques like Layer-wise Relevance Propagation (LRP) and SHAP are helping make neural network decisions more explainable, offering insights into how predictions are made.

- How are neural networks being used in industries today?

They are widely used in healthcare for diagnostics, in autonomous systems like self-driving cars, and in language processing for applications like chatbots and translation services.

- What does the future hold for neural networks?

Emerging technologies like neuromorphic computing and quantum computing will push the boundaries of neural networks, making them faster and more efficient for advanced applications.

Further Reading

For those looking to deepen their understanding of neural networks and the evolving field of deep learning, the following books provide a comprehensive exploration of both foundational concepts and cutting-edge advancements. Whether you’re a researcher, developer, or simply curious about AI, these resources will guide you through the complexities of neural networks and offer practical insights into their real-world applications.

Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

This is one of the most comprehensive books on deep learning and neural networks, covering the fundamental concepts and practical applications. It’s highly suitable for those who want a deep dive into the theory behind neural networks.

Neural Networks and Deep Learning: A Textbook by Charu C. Aggarwal

This book offers a detailed overview of both classical and modern models in deep learning, emphasizing the algorithms and theoretical foundations that are crucial for understanding neural networks.

Deep Learning with Python by François Chollet

This book is a great resource for developers looking to implement neural networks using Python. It explains key concepts in a practical way and includes hands-on examples using Keras and TensorFlow.