AI adoption is booming, but scaling it remains a steep climb. Research shows that only around 10% of AI projects ever move beyond pilot stages. Most stall due to fragmented data, unclear ROI, or lack of organizational alignment. Moving from experimentation to enterprise-wide impact requires more than advanced models; it calls for strategy, structure, and change management.

This guide explores how to scale AI in your organization, step by step, and how RTS Labs helps businesses build the right foundation to make AI truly work at scale.

Why Scaling AI Matters More Than Ever

Once seen as a novelty, AI is now a fundamental part of modern business operations. According to McKinsey’s 2025 State of AI survey, 78% of organizations now use AI in at least one business function, up from 55% just a year earlier. But adoption is just the first step; few organizations see it translate into enterprise-wide impact.

Here’s what the data shows about why scaling matters:

1. Growing Usage But Limited Bottom-Line Impact

While deployment is accelerating, few companies are seeing broad financial impact. Over 80% of firms report no meaningful impact on enterprise-level EBIT from generative AI so far.

Only 17% say that 5% or more of their EBIT in the past 12 months is attributable to gen AI. The numbers make one thing clear: without scaling, pilots will never translate into business results.

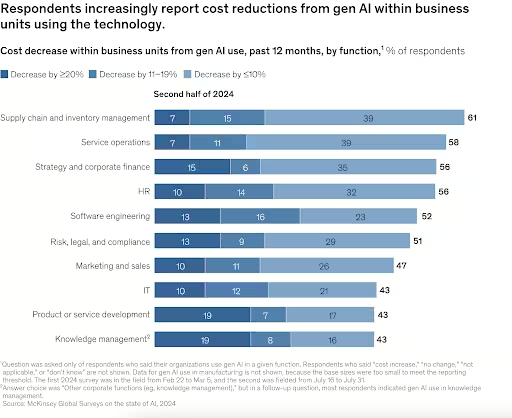

2. Cost Reductions and Efficiency Gains at Function Level

Within individual business units using generative AI, a majority now report cost reductions. Even though the savings haven’t fully aggregated at the enterprise level, they show that scaling can progressively knit localized wins into organization-wide efficiency.

3. Functional Expansion and Multi-Domain Penetration

Companies are bringing AI into operations, finance, and customer service, and not limiting it to IT. The survey notes that AI is increasingly used in marketing, sales, service operations, product development, and knowledge management.

On average, organizations report using AI in three business functions. This multi-domain presence is a necessary precursor to cross-functional scaling.

4. Workflow Redesign Is a Key Differentiator

Of the many practices organizations can adopt, fundamentally redesigning workflows shows one of the strongest correlations to EBIT impact. In fact, among 25 attributes evaluated, McKinsey finds that workflow redesign has one of the largest effects on value realization. Scaling success depends not on more AI, but on redesigning the way work functions.

5. Leadership & Governance Make a Big Difference

The survey highlights that when CEOs directly oversee AI governance, enterprises report higher bottom-line impact. Also, tracking KPIs, defining roadmaps, embedding adoption practices, and internal communications are strongly correlated with value capture.

Common Challenges in Scaling AI

According to Intercom’s Customer Service Trends Report 2024, 87% of leaders say customer and internal expectations have risen sharply due to AI adoption, making the road to scale even steeper.

Here are five of the biggest challenges organizations face on that journey.

1. Siloed Pilots That Don’t Connect to the Bigger Picture

Many companies start with small AI projects in individual departments (fraud detection here, chatbot automation there), but those pilots often remain trapped in silos. Without shared infrastructure or governance, insights can’t move across business functions. Such fragmentation limits organizations’ ability to realize network effects or measure collective ROI.

2. Data That Isn’t Ready for Scale

Data is the foundation of any scalable AI system, yet it’s where most initiatives stall. Inconsistent data formats, incomplete records, and a lack of governance create noise instead of insight. When every department defines “customer” or “performance” differently, even the most advanced model can’t operate effectively.

3. Workforce Misalignment and Change Fatigue

AI’s introduction often triggers uncertainty. Intercom reports that while 69% of executives believe AI is reshaping job roles, only 34% of employees agree, a clear perception gap that can lead to quiet resistance. Teams that feel left out of the transition are less likely to embrace new systems. Real scaling requires cultural alignment: transparent communication, retraining, and inclusion in design and rollout processes.

4. Governance Gaps and Compliance Risks

As AI spreads into regulated workflows, from underwriting to customer service, the risks of bias, privacy breaches, and opaque decisioning grow. Many organizations lack standardized frameworks for oversight, model documentation, or bias monitoring.

That makes it difficult to scale responsibly or win stakeholder trust. The companies that succeed are those that treat governance as a core design principle, not an afterthought.

5. Misaligned Metrics and Undefined ROI

It’s hard to scale what you can’t measure. Many AI initiatives focus on model accuracy or automation rates, but those don’t always translate to business outcomes.

For example, Intercom found that 25% of service teams struggle to measure the right performance metrics in AI-assisted workflows, and the same applies to AI programs more broadly.

True scaling requires redefining KPIs around business impact: revenue growth, cost savings, and experience improvement.

The Building Blocks of Scalable AI

Scaling AI starts with architecture. The organizations that move beyond pilots do so because they invest in the right foundations: reliable data, repeatable infrastructure, and teams that know how to adapt.

Each represents a strategic building block that determines how AI moves from experimentation to business advantage.

1. A Unified, High-Quality Data Foundation

Data is the lifeblood of AI, yet most enterprises still operate with fragmented, inconsistent data streams. Scalable AI requires a centralized data ecosystem that combines quality, accessibility, and governance.

That means clear data ownership, standardized definitions, and automated validation pipelines that keep information reliable.
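As a rough illustration of what an automated validation step built on a standardized definition might look like, here is a minimal sketch. The `REQUIRED_FIELDS` schema and the field names are hypothetical; a real pipeline would take its shared "customer" definition from the organization’s own data catalog.

```python
from typing import Any

# Hypothetical shared definition of a "customer" record (illustrative only).
REQUIRED_FIELDS = {"customer_id": str, "email": str, "lifetime_value": float}


def validate_record(record: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record or record[field] is None:
            problems.append(f"missing {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(f"{field} should be {expected_type.__name__}")
    return problems


def split_valid_invalid(records):
    """Separate clean records from rejected ones, keeping the reasons."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected
```

Keeping the rejection reasons alongside each record gives data owners something concrete to fix, rather than a silent drop.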

2. A Strategic Model Approach: Custom, Hybrid, and Responsible

Not every challenge needs a large language model (LLM). Some demand smaller, domain-specific algorithms. The most effective enterprises use a hybrid model strategy. They blend custom ML models for proprietary use cases with pre-trained LLMs for generative or language-based tasks.

3. Scalable Infrastructure and MLOps Pipelines

Without strong infrastructure, even the smartest model will stall. Scalable AI depends on MLOps: continuous integration, deployment, and monitoring pipelines that keep models accurate and up to date. Automation ensures that updates, retraining, and performance checks happen seamlessly, reducing operational risk.
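One small piece of such a monitoring pipeline is an automated performance check that decides when retraining is due. The sketch below assumes accuracy is the tracked metric and that a 0.05 drop is the trigger; both the function name and the threshold are illustrative choices, not a prescribed standard.

```python
from statistics import mean


def needs_retraining(baseline_accuracy, recent_accuracies, tolerance=0.05):
    """Flag a model whose recent average accuracy has fallen more than
    `tolerance` below the accuracy measured at deployment time."""
    if not recent_accuracies:
        return False  # nothing observed yet, so no evidence of degradation
    return baseline_accuracy - mean(recent_accuracies) > tolerance
```

In practice a check like this would run on a schedule and open a retraining job or alert, rather than being called by hand.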

4. Governance, Ethics, and Risk Management

Scaling AI responsibly means knowing how it makes decisions. Governance frameworks bring transparency, accountability, and compliance into the process. Whether it’s GDPR, HIPAA, or internal audit requirements, every model needs documentation, testing, and bias evaluation.

5. Change Management and Workforce Enablement

Technology alone doesn’t scale; people do. Organizations that succeed invest in training, communication, and cultural readiness. Employees must see AI not as a threat, but as a co-pilot for better decisions.

We support this through AI literacy programs, hands-on workshops, and user adoption plans that help teams build trust in new systems. When teams understand the “why” behind AI, adoption accelerates and value compounds.

Case in Point: Transforming Data into Business Insights

A leading pharmaceutical company relied on a third-party analytics vendor that couldn’t provide timely, trustworthy data. Teams struggled to make confident business decisions, and growth was stalling.

We partnered with the client to build a scalable, cloud-based data platform that unified fragmented sources and enforced consistent business logic. The new system automated data ingestion, quality checks, and governance workflows, giving every department access to accurate, real-time insights.

Within months, the company replaced its inefficient analytics vendor, increased market share by 19%, and drastically reduced the time needed to onboard new data sources.

How to Scale AI in Your Organization (Step-by-Step Roadmap)

Here’s a practical roadmap leaders can follow to move from experimentation to enterprise-scale success.

1. Set Clear Business Objectives

Start with the “why.” Define outcomes that matter (higher efficiency, faster decision-making, new revenue streams) before picking a model or technology. AI initiatives that tie directly to measurable business goals are far more likely to scale successfully.

2. Assess AI Readiness

Before scaling, audit your organization’s AI readiness across three areas: data quality, infrastructure maturity, and team capability. Identify what’s production-ready versus what needs rebuilding. We conduct readiness assessments that highlight gaps in governance, data pipelines, and skill sets, providing a clear roadmap to move forward with confidence.
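A readiness audit across the three areas above can be reduced to a simple gap check. This is only a sketch: the 1-to-5 scoring scale, the `minimum` threshold, and the area keys are assumptions made for illustration, not part of any formal assessment method.

```python
# The three areas named in the text; scores are assumed to be on a 1-5 scale.
READINESS_AREAS = ("data_quality", "infrastructure", "team_capability")


def readiness_gaps(scores, minimum=3):
    """Return the areas whose score falls below the minimum bar."""
    missing = [area for area in READINESS_AREAS if area not in scores]
    if missing:
        raise ValueError(f"no score provided for: {', '.join(missing)}")
    return [area for area in READINESS_AREAS if scores[area] < minimum]
```

For example, `readiness_gaps({"data_quality": 2, "infrastructure": 4, "team_capability": 3})` flags only `data_quality` as needing work before scaling.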

3. Prioritize High-Impact Use Cases

Start with use cases that align closely with business priorities and deliver measurable ROI. Examples include fraud detection in financial services, claims automation in insurance, and demand forecasting in logistics.

4. Build Scalable Infrastructure and MLOps Pipelines

Once the right use cases are defined, create the foundation that supports continuous model deployment, monitoring, and improvement. MLOps ensures AI systems stay reliable and compliant as they scale.

5. Adopt a Hybrid Model Strategy

Scaling often involves balancing flexibility with speed. Combine custom models built on proprietary data with pre-trained LLMs for tasks like document summarization, text analysis, or customer engagement.

6. Embed Governance and Compliance Early

Don’t wait until production to think about risk. Build explainability, audit trails, and access controls into your AI stack from the start.
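To make "audit trails and access controls from the start" concrete, here is a minimal sketch of a wrapper that checks a caller’s role and records every prediction. The role names, the in-memory `AUDIT_LOG`, and the placeholder `score` rule are all hypothetical; a production system would use its own identity provider and an append-only store.

```python
import functools
import json
import time

AUDIT_LOG = []  # stand-in for an append-only audit store
ALLOWED_ROLES = {"underwriter", "auditor"}  # hypothetical role names


def audited(model_name):
    """Wrap a predict function with a role check and an audit entry."""
    def decorator(predict):
        @functools.wraps(predict)
        def wrapper(user, role, features):
            if role not in ALLOWED_ROLES:
                raise PermissionError(f"role {role!r} may not call {model_name}")
            result = predict(features)
            AUDIT_LOG.append(json.dumps({
                "ts": time.time(),
                "model": model_name,
                "user": user,
                "features": features,
                "result": result,
            }))
            return result
        return wrapper
    return decorator


@audited("credit_risk_v1")
def score(features):
    # Placeholder rule standing in for a real model.
    return "review" if features["amount"] > 10_000 else "approve"
```

Because the check and the log live in the wrapper, every model exposed this way gets the same controls without each team reimplementing them.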

7. Train and Upskill Employees

Scaling AI requires human adoption as much as technical sophistication. Even the most advanced models will fail to deliver impact if teams don’t understand how to work alongside them. Employees need clarity on how AI fits into their day-to-day roles, what decisions it can support, and where human judgment remains essential.

Training and internal communication should focus on building confidence, not compliance, helping teams see AI as a trusted collaborator rather than a threat. When people feel equipped and informed, adoption accelerates naturally.

8. Foster Cross-Functional Collaboration

Scaling AI succeeds only when teams work on it together. Pilots stay siloed when data, engineering, and business teams don’t share infrastructure, governance, or goals. Bring those functions together early so that each understands how AI fits into its roles, what decisions it supports, and where human judgment still matters.

9. Measure, Optimize, and Expand

Scaling does not end with deployment. Organizations need to track business KPIs, evaluate model performance, and refine systems based on real-world outcomes. Continuous measurement helps identify what works, what needs adjustment, and where AI can expand into new areas of the business.
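Tracking business KPIs often starts with something as simple as return on investment per use case. The sketch below uses the standard ROI formula, (benefit - cost) / cost; the dictionary keys and any figures passed in are illustrative assumptions.

```python
def roi(benefit, cost):
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


def rank_use_cases(use_cases):
    """Sort use cases from highest to lowest ROI.

    Each use case is a dict with 'name', 'benefit', and 'cost' keys.
    """
    return sorted(
        use_cases,
        key=lambda uc: roi(uc["benefit"], uc["cost"]),
        reverse=True,
    )
```

Ranking use cases this way gives a first, rough signal of where to expand and where to adjust; it does not replace model-level performance evaluation.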

LLMs and the Future of Enterprise AI Scaling

Large Language Models are reshaping how organizations think about scaling AI. They can process vast amounts of unstructured data, automate reasoning tasks, and accelerate insights across departments that previously relied on manual knowledge work.

When implemented responsibly, LLMs can turn disconnected data and documents into dynamic, searchable intelligence.

1. The Shift from Task Automation to Knowledge Automation

Traditional AI models excel at specific, rule-based tasks such as classification or prediction. LLMs, however, enable a new layer of intelligence: understanding, summarizing, and generating human-like text. Enterprises are using them for knowledge management, document analysis, and customer engagement, allowing teams to retrieve and apply insights instantly instead of searching across silos.

2. Measurable Impact on Productivity

Early adopters are already seeing tangible benefits. McKinsey’s State of AI 2025 report found that enterprises strategically adopting LLMs can reduce knowledge-worker tasks by as much as 30–40%. This shift allows employees to focus on higher-value decision-making, creativity, and innovation rather than repetitive research or data entry.

3. Balancing Potential with Risk

As LLMs expand their footprint, new challenges arise: hallucinations, data privacy, compliance risks, and lack of explainability. Without proper oversight, even a well-trained model can produce misleading results.

Organizations should embed guardrails, validation workflows, and human-in-the-loop review to maintain accuracy and accountability.
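A guardrail with human-in-the-loop review can be as simple as a routing rule applied to each model output. This is a minimal sketch under stated assumptions: the upstream system is assumed to supply a confidence score between 0 and 1, and the blocked terms and 0.8 threshold are illustrative placeholders.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    text: str
    confidence: float  # assumed to be provided upstream, in [0, 1]


BLOCKED_TERMS = ("ssn", "password")  # illustrative sensitive terms


def route(draft, threshold=0.8):
    """Decide whether a draft is blocked, sent to a human, or auto-sent."""
    lowered = draft.text.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return "blocked"
    if draft.confidence < threshold:
        return "human_review"
    return "auto_send"
```

The blocked-term check runs first so that a high-confidence output containing sensitive data is still stopped.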

4. The Hybrid Approach to Enterprise Scale

The future of AI scaling lies in combining the breadth of LLMs with the precision of structured analytics. Enterprises are increasingly adopting hybrid architectures, where LLMs handle language-driven tasks and traditional models manage numerical or predictive workloads. This approach blends creativity with control, maximizing both innovation and reliability.
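The hybrid pattern described here amounts to routing each task to the kind of model suited to it. The sketch below uses stand-in functions only: `forecast_demand` is a simple moving average in place of a traditional predictive model, and `summarize_text` merely truncates text in place of an LLM call. No real model or vendor API is implied.

```python
def forecast_demand(payload):
    """Stand-in for a traditional predictive model: 3-period moving average."""
    recent = payload["history"][-3:]
    return sum(recent) / len(recent)


def summarize_text(payload):
    """Stand-in for an LLM call: truncates instead of truly summarizing."""
    return payload["text"][:40]


# Task types mapped to the handler that should serve them.
HANDLERS = {"forecast": forecast_demand, "summarize": summarize_text}


def dispatch(task_type, payload):
    """Route a task to its registered handler."""
    try:
        handler = HANDLERS[task_type]
    except KeyError:
        raise ValueError(f"no handler registered for {task_type!r}") from None
    return handler(payload)
```

Keeping the routing table explicit makes it easy to see, and govern, which workloads go to a language model and which stay with structured analytics.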

5. Building a Future-Ready AI Stack

To stay competitive, organizations need AI systems that can evolve. LLMs will play a key role in powering AI copilots, decision-intelligence systems, and multi-agent workflows, all of which depend on scalable, secure, and well-governed foundations. Investing now in flexible architecture and governance ensures enterprises can adopt these innovations smoothly as they mature.

Best Practices for Scaling AI Successfully

Scaling AI is not about deploying more models; it’s about creating systems that grow, adapt, and continue to deliver value as your organization evolves. The most successful enterprises treat scaling as a continuous process of learning, governance, and improvement.

1. Align AI Initiatives with Business Goals

Start every project with a business outcome in mind. Define how success will be measured, whether it’s reducing costs, improving customer satisfaction, or speeding up decision-making. AI that ties directly to business KPIs is easier to justify, fund, and expand.

2. Build Governance Into Every Stage

Responsible scaling depends on consistent governance. Establish clear policies for data privacy, bias detection, and model explainability before deployment, not after. Regular audits and transparent documentation maintain trust with both customers and regulators.


3. Create a Culture of Continuous Learning

AI is never static. Encourage experimentation and cross-team knowledge sharing. Teams that are empowered to test, learn, and iterate are far better equipped to adapt as technology and business priorities evolve.

4. Balance Speed with Control

Move fast, but not recklessly. Automation and prebuilt tools can accelerate progress, but every new integration should be validated for accuracy and compliance. Scaling sustainably means finding equilibrium between agility and oversight.

5. Invest in People as Much as Technology

Training and communication are as vital as infrastructure. Help employees understand how AI supports their work, and provide opportunities to reskill and specialize. When people see AI as an ally, adoption becomes natural rather than forced.

6. Measure and Communicate Impact

Consistent performance tracking closes the loop. Quantify outcomes in terms executives care about: ROI, efficiency gains, customer satisfaction, or revenue growth. Sharing those results across the organization reinforces trust and fuels momentum for continued scaling.

Prebuilt AI Tools vs. Custom AI Scaling Solutions

When scaling AI, organizations must choose between prebuilt tools and custom solutions. Both offer value, but they serve different needs and timelines. The right choice depends on your goals, data complexity, and appetite for control.

Prebuilt AI Tools: Speed with Trade-offs

Prebuilt platforms deliver quick results for common tasks such as chatbots or document processing. They are easy to deploy and maintain but offer limited customization and control. Over time, teams may face integration issues or vendor constraints around data privacy and transparency.

Custom AI Solutions: Flexibility for the Long Term

Custom AI requires more upfront effort but provides lasting value. These solutions align with specific data, workflows, and compliance needs, offering full ownership and scalability. They evolve with the business and deliver stronger ROI over time.

Finding the Balance

Many organizations adopt a hybrid approach, using prebuilt tools for fast deployment while developing custom systems for core or regulated operations. The goal is to balance speed with flexibility and ensure AI scales in a way that fits the organization’s strategy.

| Factor | Prebuilt AI Tools | Custom AI Solutions |
|---|---|---|
| Deployment Speed | Fast (days to weeks) | Moderate (weeks to months) |
| Flexibility | Limited configuration | Fully customizable |
| Integration | Works best in standard environments | Tailored to enterprise systems |
| Data Privacy & Control | Dependent on vendor | Full internal ownership |
| Long-Term ROI | Moderate | High |
| Scalability | Restricted by tool features | Designed for growth |

Why Partner with RTS Labs to Scale AI

Scaling AI takes more than technology. It requires a solid foundation built on trust, strategy, and measurable outcomes. We help organizations move beyond pilots to deploy AI that delivers lasting business value.

Our approach begins with clear alignment to business goals. We focus on improving operations, enhancing customer experience, and enabling faster, data-driven decisions. By combining data engineering, AI strategy, and custom development, we build systems that scale responsibly and securely.

We also focus on people. Successful scaling happens when employees understand and trust the tools they use. Through training and adoption programs, we help teams integrate AI confidently into daily work.

Looking ahead, we are preparing organizations for the next wave of enterprise AI, from copilots and decision-intelligence systems to multi-agent architectures that redefine how businesses operate.

Ready to move beyond pilots?

Let’s scale AI across your organization and build a future that is more intelligent, efficient, and adaptable. Contact us today!

FAQs

1. What does it mean to scale AI in an organization?

Scaling AI means expanding its use beyond pilot projects so it becomes part of everyday operations. It involves integrating AI across teams, processes, and systems to drive measurable business outcomes such as efficiency, cost reduction, and faster decision-making.

2. Why do most AI initiatives fail to scale?

Many initiatives fail because organizations underestimate the importance of data readiness, governance, and workforce alignment. Without a unified data foundation or executive sponsorship, AI projects often stay siloed and never reach enterprise impact.

3. How can companies ensure responsible AI scaling?

Responsible scaling requires clear governance frameworks, transparent model documentation, and human oversight. Organizations should monitor AI systems for bias, accuracy, and compliance, and regularly update their models as business needs evolve.

4. What role do Large Language Models (LLMs) play in scaling AI?

LLMs extend AI’s reach into knowledge-driven tasks such as document analysis, customer communication, and decision support. They allow organizations to process and generate information faster, making AI useful across more functions.