A failed AI automation implementation rarely fails loudly. It fails quietly through stalled pilots, brittle workflows, and systems that technically work but never change how decisions are made.

Beyond sunk technology costs, failed implementations drain productivity, erode trust in AI programs, and slow future transformation efforts. According to MIT Sloan research, organizations that experience early AI failures are significantly less likely to secure executive buy-in for subsequent initiatives, even when the underlying technology improves.

The issue isn’t that AI automation doesn’t work. It’s that AI automation implementation is often approached with the wrong mental model. Too many organizations treat AI automation as an incremental upgrade to RPA or workflow tools. In reality, AI automation introduces systems that learn, adapt, and make decisions, which fundamentally changes how automation must be designed, governed, and measured.

This article provides a practical, enterprise-tested framework for implementing AI automation correctly, while clarifying when off-the-shelf platforms fall short and where custom implementations, such as those from RTS Labs, deliver long-term value.

What Is AI Automation Implementation?

AI automation implementation is more than deploying a tool. It is the structured process of designing, integrating, deploying, and governing AI-driven systems that can automate decisions, workflows, and actions across the enterprise.

This distinction matters because AI behaves very differently from traditional automation. While RPA executes predefined rules, AI-driven automation systems operate in probabilistic environments. They rely on data quality, model performance, contextual judgment, and other factors that must be actively managed long after deployment.

For instance, when Suncoast Performance, a specialist in high-performance transmissions and technical parts, faced support load issues, they initially saw a familiar pattern. Highly skilled technicians were repeatedly pulled off core work to answer the same routine customer queries because answers were buried in manuals, scattered documentation, and web pages. This created delays, longer hold times, and an overburdened support staff.

RTS Labs helped Suncoast by implementing AI automation as an integrated system:

- Connecting the conversational AI to Suncoast’s knowledge base so answers came directly from trusted, internal technical documents.

- Using retrieval-augmented generation (RAG) to pull precise information from manuals, specifications, and policies in real time.

- Providing fallback external search when internal sources lacked an answer, with transparent source links for trust and traceability.

- Embedding continuous feedback loops where users rated responses, enabling iterative tuning and ongoing improvement.
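
The retrieve-then-fallback flow in these bullets can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Suncoast's or RTS Labs' implementation: a toy keyword-overlap scorer stands in for vector search, `external_search` is a hypothetical callable for the web-search fallback, the LLM generation step is omitted, and `min_score` is an arbitrary cutoff. Every answer carries a source link, and a simple ratings log flags sources that users rate poorly.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from statistics import mean
from typing import Callable


@dataclass(frozen=True)
class Doc:
    source: str  # link shown to the user for traceability
    text: str


@dataclass(frozen=True)
class Answer:
    text: str
    source: str
    from_internal: bool


def tokenize(s: str) -> set[str]:
    """Lowercase words with surrounding punctuation stripped."""
    words = (w.strip(".,;:?!").lower() for w in s.split())
    return {w for w in words if w}


def retrieve(query: str, docs: list[Doc]) -> tuple[Doc | None, float]:
    """Toy retriever: score each internal doc by the share of query words it contains.
    A production system would use embeddings and a vector index instead."""
    q = tokenize(query)
    best, best_score = None, 0.0
    for doc in docs:
        score = len(q & tokenize(doc.text)) / max(len(q), 1)
        if score > best_score:
            best, best_score = doc, score
    return best, best_score


def answer(query: str, docs: list[Doc],
           external_search: Callable[[str], tuple[str, str]],
           min_score: float = 0.5) -> Answer:
    doc, score = retrieve(query, docs)
    if doc is not None and score >= min_score:
        # A real RAG pipeline would pass doc.text to an LLM as grounding context;
        # this sketch returns the retrieved passage directly.
        return Answer(doc.text, doc.source, from_internal=True)
    # Fallback: no good internal match, so search externally and still return a link.
    text, url = external_search(query)
    return Answer(text, url, from_internal=False)


@dataclass
class FeedbackLog:
    """Collects 1-5 user ratings per source so weak content can be reviewed and tuned."""
    ratings: dict[str, list[int]] = field(default_factory=dict)

    def rate(self, source: str, rating: int) -> None:
        if not 1 <= rating <= 5:
            raise ValueError("rating must be between 1 and 5")
        self.ratings.setdefault(source, []).append(rating)

    def low_rated(self, threshold: float = 3.0) -> list[str]:
        return sorted(s for s, r in self.ratings.items() if mean(r) < threshold)
```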

The results:

- Routine inquiries were resolved immediately without technician involvement.

- Highly skilled techs were freed to focus on complex work, boosting productive output rather than handling repetitive questions.

- Customer satisfaction improved through faster answers, shorter waits, and fewer callbacks.

This is what true AI automation implementation looks like. It isn’t a chatbot bolted onto existing support, but a system that connects data, context, execution, feedback, and governance to deliver measurable operational impact rather than just deployed capability.

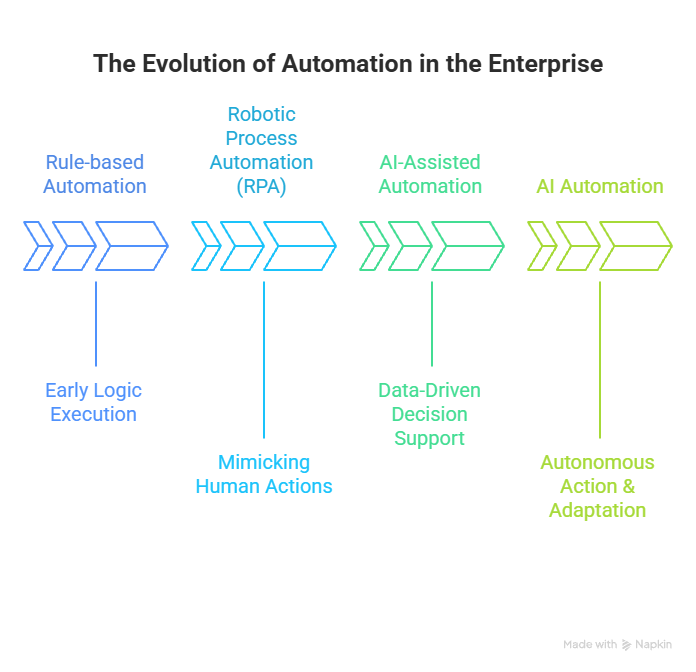

The Evolution of Automation in the Enterprise

Enterprise automation has progressed through distinct phases, each expanding what organizations can automate and how much judgment those systems can apply.

Phase 1: Rule-based Automation

Rule-based automation was the earliest stage. These systems executed predefined logic. If a condition was met, an action followed. While reliable, rule-based automation was rigid and broke down quickly when processes changed or exceptions appeared. Its value was limited to stable, repetitive tasks.

Phase 2: Robotic Process Automation

This evolved into Robotic Process Automation (RPA), which scaled rule execution across applications by mimicking human actions. RPA improved efficiency and throughput but remained fundamentally static. When inputs varied or workflows changed, bots required manual updates and human intervention.

Phase 3: AI-Assisted Automation

The next phase introduced AI-assisted automation, where machine learning models and analytics supported decision-making. These systems could classify, predict, and recommend actions based on data patterns, but they rarely executed decisions independently. Humans remained responsible for interpreting outputs and triggering downstream actions.

Current phase: AI Automation

Today, enterprises are moving toward AI automation, where systems learn from data, reason across context, and act autonomously within defined guardrails. These systems continuously adapt as conditions change, handle exceptions intelligently, and integrate directly into workflows to execute decisions at scale.

Core Components of AI Automation Implementation

AI automation implementation is fundamentally different from deploying traditional automation tools. Each step in the evolution above demanded deeper integration of data, governance, and operating discipline, and AI automation is where that progression culminates: systems that do more than automate tasks.

A true AI automation implementation brings together multiple layers that must work in concert:

- Machine learning and predictive models that adapt to changing data patterns

- Generative AI and large language models that reason over unstructured information

- Agentic AI that can plan, execute, and coordinate actions across systems

- Data pipelines and enterprise integrations that ensure decisions are made on trusted, current information

- Governance controls that define model ownership, performance thresholds, and escalation paths

When any one of these components is weak, especially data pipelines or governance, the automation may function technically but fail operationally.

TL;DR: AI Automation Implementation

- It’s a connected system: successful AI automation links models, data, workflows, feedback loops, and governance, which makes it far more than deploying a chatbot or bot.

- It goes beyond rules: Unlike RPA, AI automation operates in probabilistic environments, learns from data, handles exceptions, and acts autonomously within guardrails.

- Real value emerges when AI is embedded into enterprise data pipelines and workflows, enabling continuous adaptation and measurable business outcomes.

Why AI Automation Implementation Fails and How to Avoid It

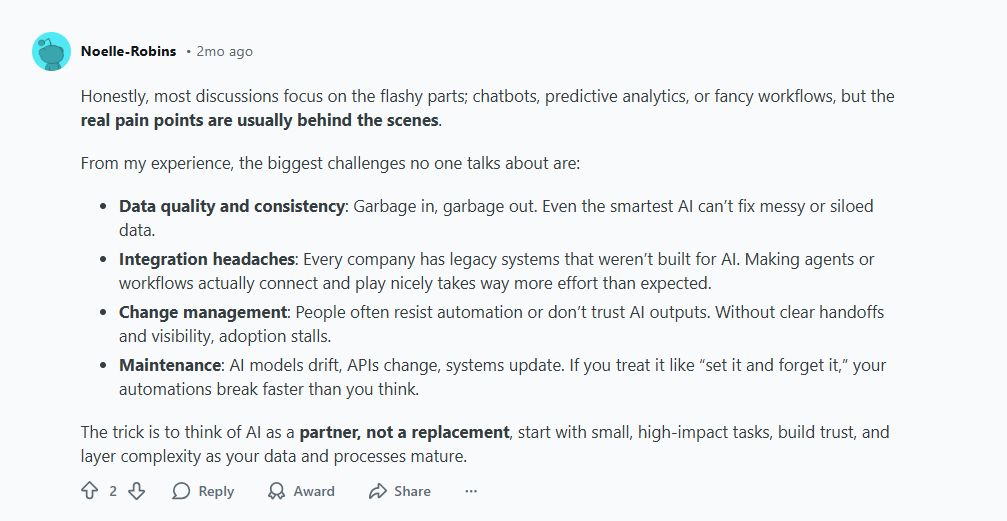

Most AI automation programs fail because the organization builds intelligence on top of instability. Practitioner discussions on Reddit and technical forums repeatedly point to process design, data readiness, and governance as the dominant failure drivers.

MIT surveyed 300 enterprises with reported automation implementations, and the results revealed a clear divide: 95% of enterprises reported that their automation programs were yielding no returns, while the remaining 5% extracted millions in value.

The reason for failure wasn’t model quality but the approach. Most implementations focused on enhancing individual productivity instead of improving overall P&L performance. As the report put it, “Most [projects] fail due to brittle workflows, lack of contextual learning, and misalignment with day-to-day operations.” Let’s look at each reason in detail:

Automating Broken Processes

Enterprises rush to apply AI to workflows that were never optimized, standardized, or fully understood. Automation amplifies whatever it touches. If a process is fragmented, AI will execute fragmentation faster, often at scale. Organizations that redesign processes before applying AI are significantly more likely to realize productivity and cost benefits than those that automate ‘as-is’ workflows.

Poor Data Quality

AI automation systems depend on continuous, trusted data flows. When AI automation is implemented without strong data governance, models drift, outputs degrade, and trust erodes quickly, especially among risk and compliance teams. Gartner echoes this: drawing on a survey of 1,203 data management leaders, it predicts that organizations will abandon 60% of AI projects that are not supported by AI-ready data.

Misunderstanding Human Oversight as a Blocker

Many organizations either over-automate, removing human judgment entirely, or under-automate, forcing humans to supervise every decision. Both approaches fail.

Human-in-the-loop controls are critical for maintaining explainability, accountability, and regulatory confidence in AI-driven systems. Enterprises that design escalation and review mechanisms from the start experience fewer operational incidents and faster adoption.

Tool-First Thinking

Enterprises often select AI automation platforms based on feature checklists rather than business objectives. Organizations that begin AI initiatives with clearly defined outcome metrics are better positioned to report measurable ROI than those that start with technology selection.

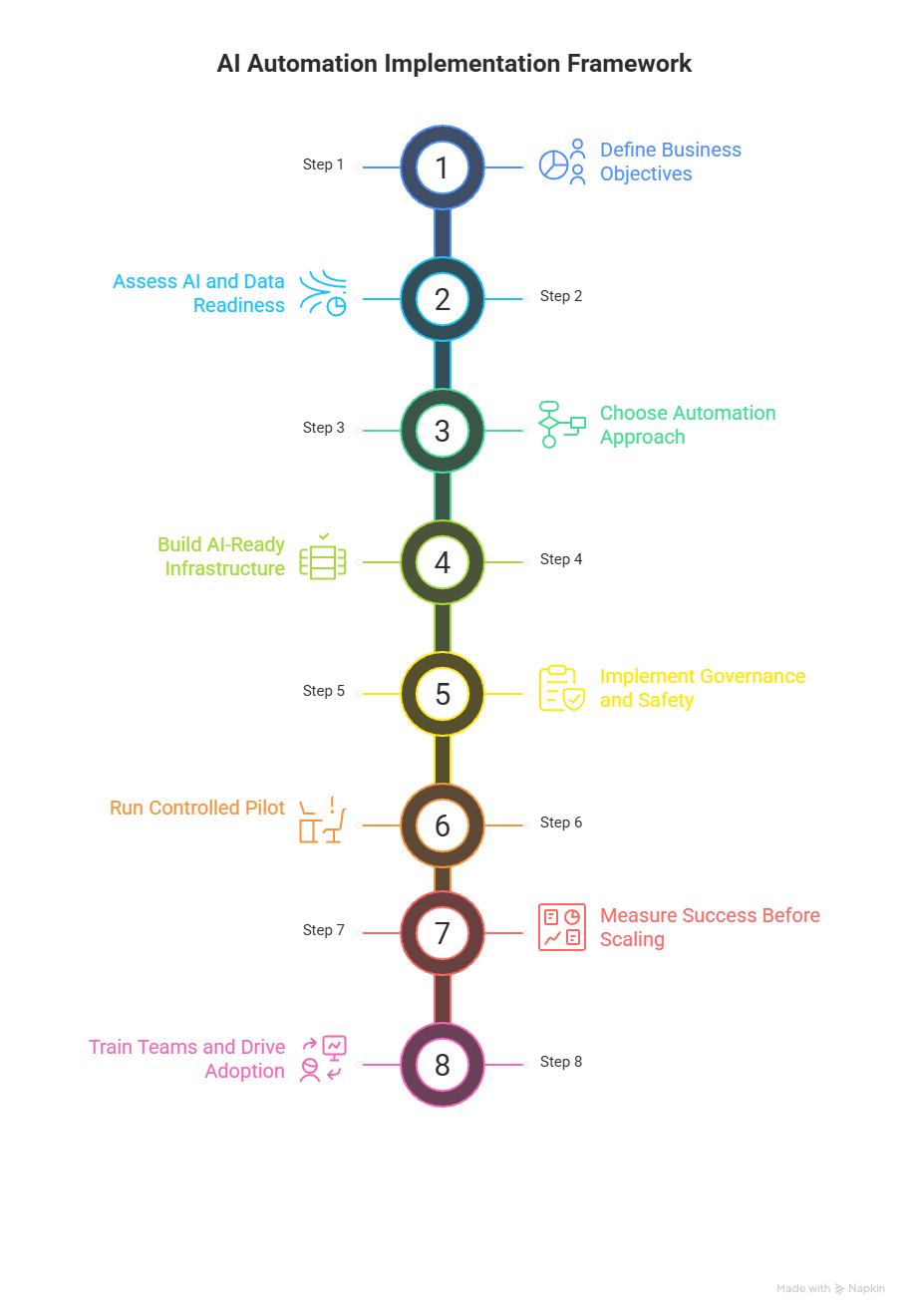

The AI Automation Implementation Framework: A Step-by-Step Guide

Successful AI automation implementation follows a disciplined progression. Skipping steps creates downstream risk that is expensive to unwind.

Here’s a step-by-step framework to move ahead with your AI automation journey:

Step 1: Define Business-Driven Objectives

AI automation initiatives that start with abstract goals like ‘increase efficiency’ or ‘use more AI’ rarely scale. High-performing programs anchor automation to specific business outcomes, such as cycle time reduction, risk mitigation, margin improvement, or decision accuracy.

Step 2: Assess AI and Data Readiness

Data readiness is among the strongest predictors of AI success, yet it remains underinvested relative to model development. Before models are built, enterprises must understand the condition of their data estate, including data availability, quality, lineage, and access controls.
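
As a rough illustration, the quality side of a readiness assessment can start with a few measurable checks. The sketch below assumes records arrive as Python dictionaries and computes per-field completeness, freshness, and duplicate rate; the field names and thresholds are hypothetical defaults, and lineage and access controls would need metadata this sketch does not model.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Any


@dataclass(frozen=True)
class ReadinessReport:
    completeness: dict[str, float]  # share of records with a non-empty value, per field
    freshness: float                # share of records updated within the allowed window
    duplicate_rate: float           # share of records whose key repeats another record's

    def ready(self, min_completeness: float = 0.95, min_freshness: float = 0.9,
              max_duplicate_rate: float = 0.01) -> bool:
        return (all(v >= min_completeness for v in self.completeness.values())
                and self.freshness >= min_freshness
                and self.duplicate_rate <= max_duplicate_rate)


def assess(records: list[dict[str, Any]], required_fields: list[str], key: str,
           updated_field: str, today: date, max_age_days: int = 30) -> ReadinessReport:
    n = len(records)
    if n == 0:
        raise ValueError("no records to assess")
    completeness = {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / n
        for f in required_fields
    }
    fresh = sum(
        1 for r in records
        if r.get(updated_field) is not None
        and (today - r[updated_field]).days <= max_age_days
    ) / n
    keys = [r.get(key) for r in records]
    duplicate_rate = (n - len(set(keys))) / n
    return ReadinessReport(completeness, fresh, duplicate_rate)
```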

Step 3: Choose the Right Automation Approach

Not every workflow requires autonomous AI. Some processes benefit from decision support, others from full agentic execution. Mature organizations segment workflows based on risk, complexity, and business impact, matching the level of automation accordingly. This avoids over-automation in sensitive areas while accelerating value elsewhere.
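
One way to make that segmentation explicit is a simple scoring rule. The 1-to-5 scales, the cutoffs, and the four levels below are illustrative assumptions rather than a standard; the point is that the mapping from risk, complexity, and impact to an automation level is written down and reviewable.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    DEFER = "low impact: not a priority for automation"
    DECISION_SUPPORT = "AI recommends, a human decides"
    HUMAN_IN_THE_LOOP = "AI acts, humans review escalations"
    AUTONOMOUS = "AI acts on its own within guardrails"


@dataclass(frozen=True)
class Workflow:
    name: str
    risk: int        # 1-5: cost of a wrong decision (financial, regulatory, reputational)
    complexity: int  # 1-5: variability of inputs and frequency of exceptions
    impact: int      # 1-5: business value of automating the workflow


def automation_level(w: Workflow) -> Level:
    for score in (w.risk, w.complexity, w.impact):
        if not 1 <= score <= 5:
            raise ValueError(f"{w.name}: scores must be between 1 and 5")
    if w.impact <= 1:
        return Level.DEFER
    if w.risk >= 4:
        # High-stakes decisions stay with people; AI supplies the analysis.
        return Level.DECISION_SUPPORT
    if w.risk == 3 or w.complexity >= 4:
        return Level.HUMAN_IN_THE_LOOP
    return Level.AUTONOMOUS
```

Checking risk before complexity means a high-risk workflow never reaches full autonomy, however routine its inputs look.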

Step 4: Build AI-Ready Infrastructure

Enterprises that fail to modernize integration and monitoring layers experience significantly more operational incidents once AI automation moves into production. AI automation should be built on resilient pipelines, integration layers, observability, and security controls.

Step 5: Implement Governance and Safety

Governance should not be a compliance afterthought. Rather, it should be a scaling enabler. Clear model ownership, performance thresholds, auditability, and escalation paths allow AI automation to expand without increasing enterprise risk. Regulators increasingly expect these controls, especially in sectors like finance and healthcare.
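
To make those controls concrete, here is a minimal sketch of a per-model policy record that names an owner, holds a performance threshold, escalates when the threshold is breached, and keeps an audit trail of every check. `ModelPolicy` and its fields are hypothetical; a real deployment would wire the escalation into alerting and a model registry.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelPolicy:
    """Hypothetical governance record: who owns a model, the performance floor it
    must hold, and who is notified when it does not."""
    model_id: str
    owner: str
    min_accuracy: float
    escalation_contact: str
    audit_log: list[dict] = field(default_factory=list)

    def evaluate(self, accuracy: float, when: datetime | None = None) -> str:
        """Compare the latest measured accuracy to the threshold and record the check."""
        when = when or datetime.now(timezone.utc)
        breached = accuracy < self.min_accuracy
        status = "escalate" if breached else "ok"
        self.audit_log.append({
            "model_id": self.model_id,
            "owner": self.owner,
            "checked_at": when.isoformat(),
            "accuracy": accuracy,
            "threshold": self.min_accuracy,
            "status": status,
            "notified": self.escalation_contact if breached else None,
        })
        return status
```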

Step 6: Run a Controlled Pilot

Pilots designed to test organizational readiness are far more likely to transition into production. Pilots should validate more than technical feasibility. They should test data flows, governance, user trust, and exception handling under real conditions.

Step 7: Measure Success Before Scaling

Scaling without measurement compounds risk. Enterprises should track KPIs such as decision accuracy, exception rates, cycle time reduction, cost savings, and human override frequency. McKinsey emphasizes that early measurement discipline is strongly correlated with long-term AI value realization.
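
A sketch of how those KPIs might be computed from logged pilot decisions, with a simple gate before scaling. The `Decision` record, the baseline cycle time, and the target values in `ready_to_scale` are assumptions for illustration; cost savings are left out because they depend on each organization's own cost model.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Decision:
    correct: bool       # judged correct on later human review
    exception: bool     # fell outside the standard automated path
    overridden: bool    # a human reversed the AI's decision
    cycle_hours: float  # time from intake to completion


def kpis(decisions: list[Decision], baseline_cycle_hours: float) -> dict[str, float]:
    if not decisions or baseline_cycle_hours <= 0:
        raise ValueError("need decisions and a positive baseline")
    n = len(decisions)
    avg_cycle = mean(d.cycle_hours for d in decisions)
    return {
        "decision_accuracy": sum(d.correct for d in decisions) / n,
        "exception_rate": sum(d.exception for d in decisions) / n,
        "override_rate": sum(d.overridden for d in decisions) / n,
        # Fractional reduction versus the pre-automation baseline.
        "cycle_time_reduction": 1 - avg_cycle / baseline_cycle_hours,
    }


def ready_to_scale(m: dict[str, float], min_accuracy: float = 0.95,
                   max_exception_rate: float = 0.10,
                   max_override_rate: float = 0.05) -> bool:
    return (m["decision_accuracy"] >= min_accuracy
            and m["exception_rate"] <= max_exception_rate
            and m["override_rate"] <= max_override_rate)
```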

Step 8: Train Teams and Drive Adoption

Organizations investing in workforce enablement alongside AI automation see higher sustained usage and lower reversion to manual processes. AI automation changes how people work. Without structured enablement, even technically sound systems face resistance.

Implementation partner RTS Labs, with its deep operational experience, helps enterprises navigate these steps pragmatically, accelerating progress without compromising safety or long-term value.

AI Automation vs. Traditional Automation

The most common reason AI automation initiatives fail is deceptively simple: organizations implement AI as if it were traditional automation.

RPA and rule-based automation excel at executing known processes under stable conditions. AI automation, by contrast, operates in environments defined by uncertainty, exceptions, and evolving inputs. Treating these two approaches as interchangeable creates structural failure.

In traditional automation, decision-making is explicit and static. Rules are written once and executed repeatedly. When conditions change, humans intervene to update logic. AI automation reverses this model. Decisions are data-driven and adaptive, with models continuously learning from new inputs.

This difference has significant enterprise implications.

Traditional automation scales linearly: more bots mean more scripts and more maintenance. AI automation scales exponentially when implemented correctly, because systems improve as data accumulates. Organizations using AI-driven approaches see material gains in exception handling and end-to-end process efficiency, while RPA-heavy programs plateau quickly.

Exception handling illustrates the gap clearly. In RPA environments, exceptions trigger manual intervention, increasing operational load as volume grows. AI automation systems can classify, route, and resolve exceptions autonomously within defined confidence thresholds, escalating only when necessary. Enterprises begin to see real transformation rather than incremental efficiency.
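
A minimal sketch of confidence-threshold routing for exceptions, assuming a classifier that returns a category and a confidence between 0 and 1. The `AUTO_RESOLVABLE` playbook and both thresholds are hypothetical; in practice they would be set from pilot data and reviewed by the process owner.

```python
from dataclasses import dataclass

# Hypothetical playbook: exception types approved for autonomous resolution.
AUTO_RESOLVABLE = frozenset({"address_mismatch", "duplicate_invoice", "missing_po_number"})


@dataclass(frozen=True)
class Classification:
    category: str      # predicted exception type
    confidence: float  # classifier's probability for that type, 0-1


def route(c: Classification, auto_threshold: float = 0.90,
          review_threshold: float = 0.60) -> str:
    if not 0.0 <= c.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if c.category in AUTO_RESOLVABLE and c.confidence >= auto_threshold:
        return "auto_resolve"      # resolved without human involvement
    if c.confidence >= review_threshold:
        return "route_for_review"  # sent to the owning team with the AI's classification attached
    return "escalate"              # too uncertain to classify: hand to a specialist
```

Keeping the allow-list separate from the thresholds means high confidence alone is not enough to act; the category must also be one the business has approved for autonomous resolution.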

Most importantly, the enterprise impact differs. Traditional automation improves task efficiency. AI automation reshapes how work flows across functions, enabling faster decisions, lower risk exposure, and more resilient operations.

When organizations fail to recognize this distinction, they design AI automation programs that look sophisticated on the surface but collapse under real-world complexity.

| Dimension | Traditional Automation (RPA / Rules) | AI Automation |
|---|---|---|
| Operating Environment | Stable, predictable processes | Dynamic, uncertain, exception-heavy |
| Decision Logic | Explicit, static rules | Data-driven, adaptive models |
| Change Handling | Manual rule updates by humans | Continuous learning from new data |
| Scalability | Linear (more bots = more maintenance) | Exponential (performance improves with data) |
| Exception Handling | Manual intervention required | Autonomous classification and resolution |
| Performance Over Time | Plateaus as complexity increases | Improves as systems learn |
| Enterprise Impact | Task-level efficiency gains | End-to-end process transformation |
| Risk & Resilience | Fragile under change | More resilient and adaptive |
| Common Failure Mode | Breaks when processes or inputs change | Treated like traditional automation |

Hybrid AI Automation = Generative AI + Agentic AI

Most enterprises experimenting with AI automation start with generative AI. It’s visible, fast to pilot, and immediately useful for summarization, reasoning, and working with unstructured data. But generative AI alone does not automate work. It produces insight, but it isn’t meant for action.

Here’s how hybrid AI automation can help:

Automates Entire Workflows

Hybrid AI automation combines generative AI for cognition with agentic AI for execution. Together, they form systems that can understand context, make decisions, and carry out actions across enterprise workflows.

Handles Complexity With Context

Generative AI excels at interpreting complexity. It can analyze contracts, extract meaning from documents, reason over policies, or synthesize recommendations from large volumes of data. Agentic AI takes the next step. It plans tasks, triggers workflows, interacts with systems, and coordinates actions based on defined objectives and constraints.

A practical example makes this concrete. Generative AI reviews a set of supplier contracts, identifies clauses that violate procurement policy, and explains the risk. Agentic AI then routes approvals, updates records in ERP systems, notifies stakeholders, and logs decisions for audit, without manual intervention unless confidence thresholds are breached.
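
The contract example can be sketched as two cooperating pieces: a generative step that produces a structured finding, and an agent that turns findings into actions, escalates below a confidence threshold, and logs every decision. `review_contract` is a keyword-matching placeholder for an LLM call, and the tool names (`route_approval`, `update_erp`, `notify_stakeholders`, `escalate_to_human`) are hypothetical stand-ins for real integrations.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Finding:
    contract_id: str
    violation: str | None  # policy breached, or None if the contract is compliant
    explanation: str       # model's rationale, kept for reviewers and auditors
    confidence: float      # 0-1


def review_contract(contract_id: str, text: str) -> Finding:
    """Placeholder for the generative step. A real system would prompt an LLM with the
    contract and the procurement policy and parse a structured response."""
    if "auto-renew" in text.lower():
        return Finding(contract_id, "auto-renewal without procurement review",
                       "Clause renews the contract automatically each term.", 0.92)
    return Finding(contract_id, None, "No policy conflicts detected.", 0.95)


@dataclass
class ContractAgent:
    """Agentic step: plans actions for a finding, executes them via tools, logs each decision."""
    tools: dict[str, Callable[[Finding], None]]
    threshold: float = 0.85
    audit: list[tuple[str, str]] = field(default_factory=list)

    def plan(self, f: Finding) -> list[str]:
        if f.violation is None:
            return []
        if f.confidence < self.threshold:
            return ["escalate_to_human"]
        return ["route_approval", "update_erp", "notify_stakeholders"]

    def handle(self, f: Finding) -> list[str]:
        steps = self.plan(f)
        for step in steps:
            self.tools[step](f)
        # Every decision, including "no action", goes to the audit trail.
        self.audit.append((f.contract_id, ",".join(steps) or "no_action"))
        return steps
```

Separating `plan` from `handle` lets reviewers inspect what the agent intends to do for a finding without triggering any downstream system.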

Gives Measurable ROI Outcomes and Reduces Risk

End-to-end automation, where decision-making and execution are tightly coupled, delivers significantly higher productivity gains than partial automation approaches. Exception handling improves, cycle times compress, and dependency on manual oversight decreases.

Enterprises are increasingly gravitating toward this hybrid model because confidence thresholds and guardrails contain risk even as automation depth increases.

Better Governance

Hybrid AI automation aligns better with enterprise governance expectations. Generative AI can record the rationale and sources behind each recommendation, supporting traceability, while agentic systems operate within clearly defined guardrails. This separation of reasoning and action makes it easier for risk, compliance, and audit teams to validate outcomes without slowing innovation.

Achievable Scalability

For enterprises serious about scaling AI automation, hybrid models are becoming the default because they reflect how complex organizations actually operate.

AI Automation Platforms vs. Custom Implementation

As AI automation matures, enterprises face a strategic decision: whether to adopt prebuilt platforms or invest in custom AI automation tailored to their operating model. This choice has long-term implications that extend far beyond initial deployment speed.

Prebuilt AI Automation

Prebuilt AI automation platforms offer a clear advantage early on. They accelerate experimentation, reduce setup effort, and provide packaged integrations. For teams validating use cases or running limited-scope automation, this speed can be valuable. However, platform-led automation often plateaus once enterprises attempt to scale across complex, cross-functional workflows:

Lack of Flexibility

Prebuilt platforms are designed for broad applicability, not deep alignment with enterprise-specific processes, data models, or governance requirements. As automation expands, organizations encounter customization limits that force workarounds, introducing fragility and hidden operational cost.

Limited Data Ownership

Many platforms abstract data and decision logic into vendor-controlled layers. Over time, this limits transparency, complicates audits, and constrains model evolution. In regulated environments, this can become a material risk.

Custom AI Automation

Custom AI automation implementations take a different path:

- They are slower to start but designed to scale deliberately.

- Enterprises retain control over data pipelines, models, and governance frameworks.

- Automation logic aligns directly with internal processes rather than adapting processes to tool constraints.

- Over time, this approach supports higher ROI because systems evolve with the business instead of being replaced or heavily retrofitted.

The distinction becomes most visible in long-term value realization. Organizations investing in custom AI systems built around their data, workflows, and risk profiles are more likely to sustain AI-driven performance gains beyond the initial deployment phase.

Experienced implementation partners like RTS Labs make a measurable difference here. Teams that design AI automation with enterprise architecture, governance, and change management in mind help organizations avoid short-term wins that lead to long-term limitations.

| Dimension | Prebuilt AI Automation Platforms | Custom AI Automation Implementation |
|---|---|---|
| Time to Deploy | Fast pilots and quick experimentation | Slower start, deliberate design |
| Flexibility | Limited to platform capabilities | Fully aligned to enterprise processes |
| Data Ownership | Often abstracted or vendor-controlled | Full ownership and transparency |
| Scalability | Plateaus with complex workflows | Designed to scale across functions |
| Governance and Compliance | Constrained by vendor rules | Built to enterprise risk profiles |
| Long-Term ROI | Early wins, diminishing returns | Sustained, compounding impact |
| System Evolution | Dependent on the vendor roadmap | Evolves with business strategy |

How RTS Labs Enables Successful AI Automation Implementation

At enterprise scale, AI automation success is not defined by how quickly systems are deployed. It is defined by whether automation continues to deliver economic value without increasing operational or regulatory risk.

RTS Labs approaches AI automation implementation with this long-term lens. Rather than optimizing for speed alone, the focus is on sustainable unit economics, i.e., how automation behaves as volume grows, regulations evolve, and business priorities shift.

From an economic standpoint, this means designing automation that reduces cost per decision over time. Custom AI automation allows enterprises to avoid per-action licensing traps, minimize rework caused by brittle integrations, and reuse models and orchestration logic across multiple workflows.

We treat risk management as a value enabler, not a constraint. As regulators increase scrutiny around AI explainability, accountability, and data usage, enterprises without embedded governance face mounting friction.

RTS Labs embeds governance directly into the automation lifecycle. This includes clear model ownership, auditable decision trails, performance monitoring, and human-in-the-loop controls aligned to risk thresholds. These mechanisms reduce operational incidents while increasing confidence among legal, compliance, and audit stakeholders.

Our custom implementations, designed with modular architectures, allow enterprises to update models, adjust policies, and refine workflows without destabilizing production systems.

Ultimately, successful AI automation implementation is less about tools and more about operating discipline. RTS Labs brings experience across strategy, data engineering, machine learning, and enterprise integration to help organizations move from experimentation to execution, without sacrificing control, trust, or long-term value.

For enterprises ready to move beyond fragmented automation and into decision-driven operations, the difference lies in how AI is implemented, governed, and evolved. Begin your automation journey with RTS Labs today!

FAQs

1. What does AI automation implementation actually involve?

AI automation implementation involves designing, deploying, and governing AI-driven systems that automate decisions and workflows. It requires alignment across business objectives, data readiness, infrastructure, governance, and workforce adoption.

2. Why do most AI automation initiatives fail to scale?

Most initiatives fail because enterprises treat AI automation like traditional automation. Common issues include automating broken processes, poor data quality, lack of governance, and tool-first decision-making instead of outcome-driven design.

3. How is AI automation different from RPA?

RPA follows static, rule-based logic, while AI automation uses data-driven models that learn and adapt. AI automation handles uncertainty, exceptions, and decision-making at scale, whereas RPA is limited to predefined tasks.

4. Should enterprises use AI automation platforms or build custom solutions?

Platforms can accelerate early experimentation, but custom AI automation typically delivers greater flexibility, governance, data ownership, and long-term ROI, especially for complex, regulated, or cross-functional workflows.

5. How do enterprises measure success in AI automation implementation?

Success should be measured using business and operational KPIs such as decision accuracy, exception rates, cycle time reduction, cost savings, human override frequency, and risk exposure.